Twitter took a (somewhat) principled stand last week. They exhibited some understanding of the fact that product leadership extends beyond creating a product: it also entails product stewardship. By “product stewardship” I mean taking a stand on what people should and shouldn’t be able to do with your product.

Jony Ive put it well when he was interviewed about the iOS feature Screen Time: “If you’re creating something new, it is inevitable there will be consequences that were not foreseen,” he said. “It’s part of the culture at Apple to believe that there is a responsibility that doesn’t end when you ship a product.”

This is an idea I’ve been thinking about since I was at eBay. As I wrote a couple of years ago:

When I led product at eBay, we wanted to be “a well-lit place to trade.” The company’s mission was “to empower people by connecting millions of buyers and sellers around the world and creating economic opportunity.” That was the intention. But as we scaled, people began to use eBay in ways we hadn’t predicted. At one point people began trading disturbing items, including Nazi memorabilia. As we thought about how to solve it, we asked ourselves a few questions: Who are we? What do we believe? Why did we create this product? Once we framed it in terms of core values, the decision about what to do became clear. The company decided to ban all hate-related propaganda, including Nazi memorabilia.

This week, yet another black man was murdered in broad daylight because of the color of his skin. It makes me ill. And it makes stewardship even more relevant.

Twitter, much like eBay in the past, asked themselves who they were, what they believed in, and why they created the product. But unlike eBay, they were not bold. Instead, they took a baby step forward last week and showed that they were finally willing to face the consequences of taking a (little) stand.

Twitter has a set of rules and policies. They include:

- You may not threaten violence against an individual or a group of people. We also prohibit the glorification of violence.

- You may not threaten or promote terrorism or violent extremism.

- You may not engage in the targeted harassment of someone, or incite other people to do so.

- You may not promote violence against, threaten, or harass other people on the basis of race, ethnicity, national origin, caste, sexual orientation, gender, gender identity, religious affiliation, age, disability, or serious disease.

And until last week, Twitter had declined to enforce these policies when it came to one user: Donald Trump. Twitter’s reasoning was that as the President, his tweets were of public interest, and different rules applied to him. And so for years, Twitter allowed the most powerful man to:

- threaten violence

- threaten or promote extremism

- engage in harassment

- promote violence against, threaten, and harass people on the basis of race

Twitter allowed the product they had built with love and care to be abused and defiled. And then, last week, Donald Trump went too far, even for Twitter.

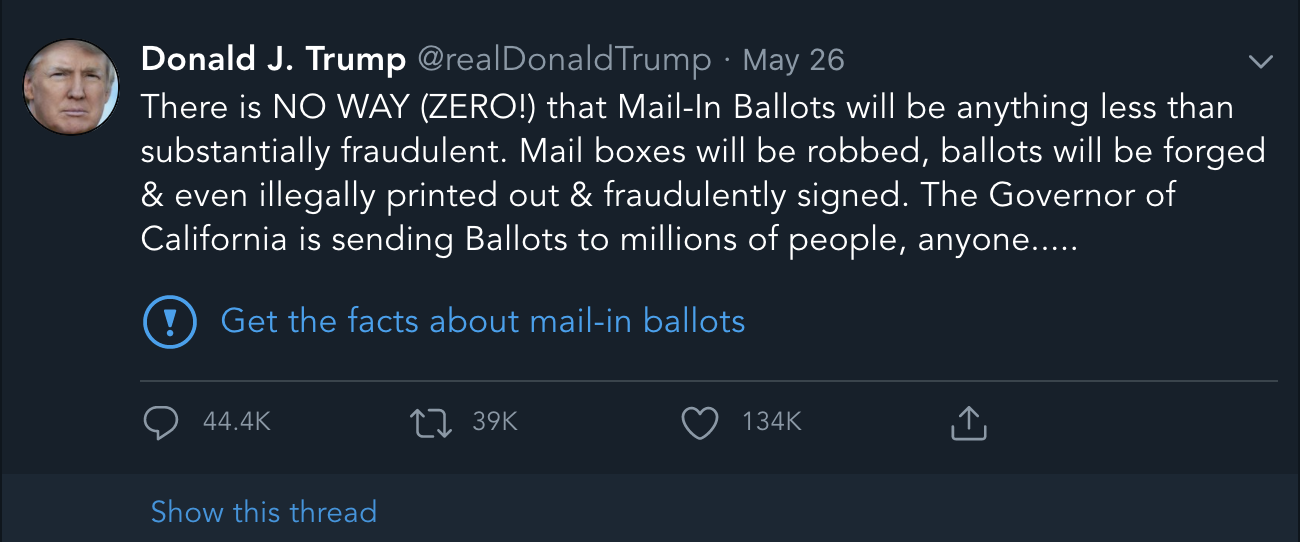

First, Trump tweeted falsehoods about mail-in ballots. This violated Twitter’s Election Misinformation policies, which have existed since 2018. So Twitter enforced its policies and added a misinformation label to his tweet.

Since Trump is also a child (and a dictator wannabe), he went after a Twitter policy employee, who received numerous death threats as a result. He also threatened to revoke Section 230 of the Communications Decency Act.

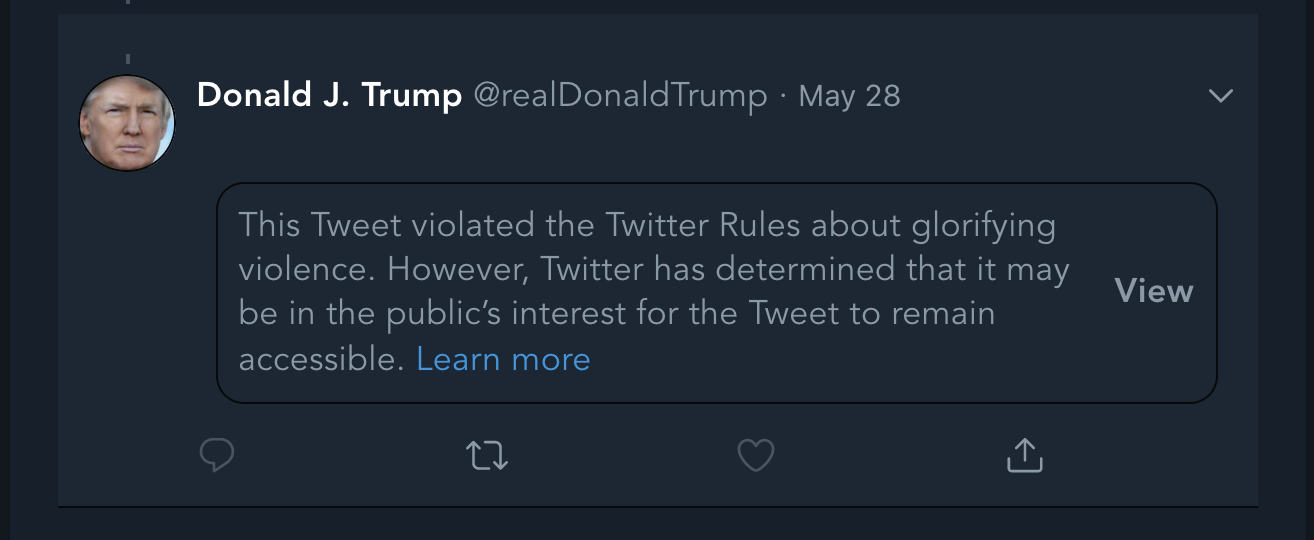

Second, Trump used language that included a threat of violence, which he has done before. This time, finally, Twitter hid the tweet behind a warning.

This immediately divided the Twitterverse. Many, who felt this small step was long overdue, were glad. Others felt that this was a huge violation of free speech.

I disagree with the second group. Twitter is a private company, which means the First Amendment does not apply. The First Amendment limits what the government can do to restrict citizens’ speech; it does not bind private businesses. A store can post “No shirt, no service,” and a restaurant can ask someone to leave if they start cursing loudly. In addition, Twitter actually has rules and policies that it had not been enforcing with Trump. Those rules aren’t new. Starting to enforce the existing rules fairly, and applying them to all users, is not a new restriction on speech. If Trump were not President, he’d have been suspended a long time ago.

So, at least they did something. They decided to enforce a version of the rules. Sort of lame. But better than nothing (our new low standard).

Meanwhile, the other big platform in tech land is, of course, Facebook. They also have rules, and they decided that the rules do not apply to Trump. They gave the racist-in-chief carte blanche. They effectively and fully caved. And with that, they picked a side.

As I said in my piece:

As product leaders, we all want people to love what we create. But people often use our products in ways we never could have predicted. Once we release something into the world, it belongs to the users — and sometimes they use our products in unexpected and negative ways. We can’t be held responsible for what they do with it… right?

We have become painfully aware of what can happen when the tools we use encourage our worst instincts and amplify the most virulent voices. In the past few months, there have been several violent attacks whose suspects had been vocal about their beliefs on social media. Do the platforms really have no control over the ways in which their products are used? That feels both naive and untrue.

My friend Ashita Achuthan, who used to work at Twitter, said this: “Technology’s ethics mirrors society’s ethics. As technologists we apply a set of trade-offs to the design decisions we make. While we are responsible for thinking through the second and third order effects of our choices, it is impossible to predict every use of our products. However, once a new reality emerges, it is our responsibility to ask who has the power to fix things. And then fix them.”

To be clear, these decisions are not easy because these are complex problems. There are legal considerations, there are social considerations, there are moral and ethical considerations. When platforms are used globally, these decisions are hard to rush. Policy teams, business teams, and product teams agonize over where to draw the line and the unintended consequences of these decisions. As I tweeted on Thursday night, regardless of the decision, people will criticize it. But at the end of the day, these decisions have to be made. That is the job when you run a company like this.

In the past week, two white male CEOs—Jack Dorsey and Mark Zuckerberg—made two different choices. They have shown us who they are. It is now up to us (the users, the employees, the investors) to decide if we want to support that.

What can one do? If I use a platform that puts the most vulnerable at risk, I can stop using it. If I work at a company, I can evaluate whether my values match those of its leadership: my time matters, and where I work and whom I enrich with my work matter. If our values are aligned and we disagree only on tactics, I can try to convince the leadership of my argument. If my values and those of the leadership are not aligned (and if I am in a position to do so), I might consider leaving for a company more aligned with my values. And if I am an investor in a company that doesn’t share my values, I can sell my shares.

The United States of America was built on abusing black men, women, and children (in addition, of course, to Native Americans). That some of our fellow citizens live in fear and get killed on a whim is not acceptable. We should not allow this to keep happening. If you live in America, whether you were born here or came here later in life (like I did), everything we have is built on top of black bodies.

It is our job, as human beings and as leaders, to stand up for what’s right. And it is not right that the biggest bully in our country can use the platforms that were built to connect people to threaten and intimidate the most vulnerable. The leaders of these companies need to be stewards of their platforms and make sure the platforms are not used to harm people in the real world.

Thank you to Ashita Achuthan for reading drafts of this post.

This is the post and video on Product Leadership is Product Stewardship.